Tencent Keen Security Lab Proves Tesla Autopilot Can Be Tricked

Elon Musk commended Tencent Keen Security Lab for its work uncovering flaws in Tesla’s driver-assistance system. The lab found a way to trick a Tesla Model S into entering the lane of oncoming traffic simply by placing stickers on the road.


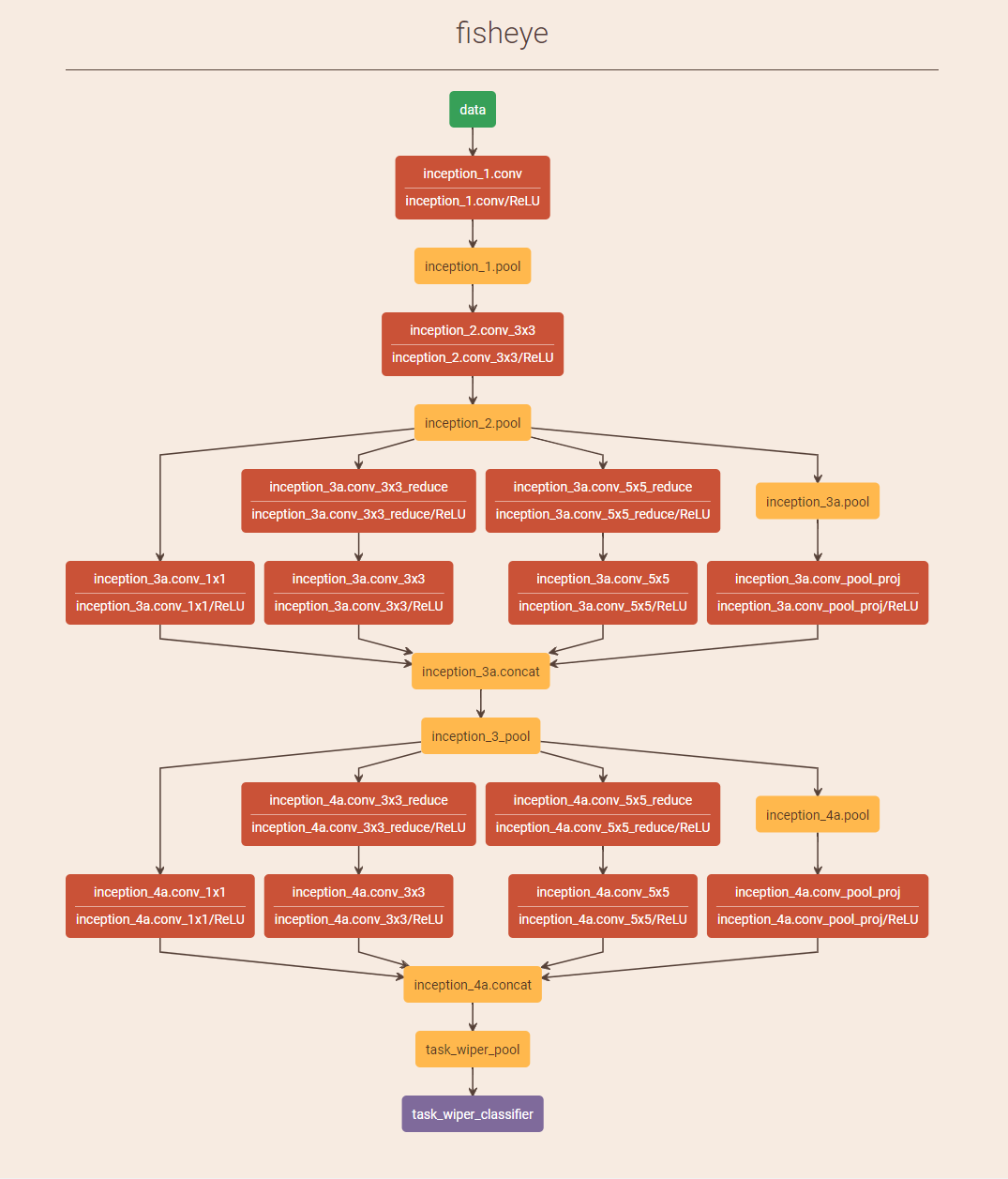

Keen Labs, a prominent cybersecurity research group, developed experiments to test the Tesla autopilot’s lane-recognition tech.

They discovered that Tesla’s autopilot would detect a lane where there were just three inconspicuous tiny squares strategically placed on the road.

“Based on the research, we proved that by placing interference stickers on the road, the Autopilot system will capture these information and make an abnormal judgement, which causes the vehicle to enter into the oncoming lane,” Keen Labs wrote in a blog post.

Tencent Keen Security Lab also found that it could activate the car’s automatic windshield wipers by displaying a specially crafted image on a TV placed in front of the windshield.

Tesla dismissed the wiper finding, commenting that displaying an image on a TV placed directly in front of a car’s windshield is not a real-world situation that drivers would face, nor is it a safety or security issue.

It’s not the first time Keen Labs has exposed potential problems in the safety and security of Tesla’s digital systems. Back in 2016, its researchers discovered a way to remotely take control of a Tesla’s brakes.